i test in prod.

I recently acquired this “i test in prod.” t-shirt from the fine folks at Honeycomb. I was excited to get it because it’s a little bit exclusive. But also because I do test in prod, and it’s powerful.

When I started following Charity Majors on Twitter, I was initially startled by the pushback whenever she advocated testing in prod. Before I knew the idea was controversial, I had already learned from experience (and a little luck) how powerful production environments are for testing changes. Now that I know better, I want to share some experiences where testing in production helped (or would have helped).

What I didn’t know then

Back in 2015, I was working on a performance-sensitive change. When I started instrumenting the code I was working on, I noticed that a lot of time was being spent before the code I was interested in even ran.

As I pulled on that thread, I discovered that an unreasonable amount of time was spent loading dependencies. This code ran on a homegrown platform, so the dependency loading was homegrown. Each shared dependency prevented itself from being re-executed. But the files containing each dependency were loaded and parsed each time they were named as a dependency. As a result, common dependencies were read from disk and parsed up to dozens of times.

The fix was pretty straightforward: each time a dependency is declared, check whether it has already been loaded. If not, load it and mark it as loaded; otherwise, do nothing.
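To make the fix concrete, here is a minimal sketch of a loader that reads and parses each dependency at most once. The platform in the story was homegrown and its code isn't shown, so `DependencyLoader`, `require`, and `read_and_parse` are hypothetical names; `read_and_parse` stands in for the expensive disk read plus parse.

```python
from typing import Callable, Dict, List


class DependencyLoader:
    """Hypothetical loader: each dependency is read and parsed at most once."""

    def __init__(self, read_and_parse: Callable[[str], object]) -> None:
        # Stand-in for the expensive "read file from disk and parse it" step.
        self._read_and_parse = read_and_parse
        # Registry of dependencies already loaded, keyed by name.
        self._loaded: Dict[str, object] = {}

    def require(self, name: str) -> object:
        # Only do the expensive work the first time a dependency is declared;
        # every later declaration reuses the already-loaded result.
        if name not in self._loaded:
            self._loaded[name] = self._read_and_parse(name)
        return self._loaded[name]


# Usage: "util" is declared three times but parsed only once.
parse_calls: List[str] = []


def fake_read_and_parse(name: str) -> object:
    parse_calls.append(name)  # record each (expensive) parse
    return f"module:{name}"


loader = DependencyLoader(fake_read_and_parse)
for dep in ["util", "util", "db", "util"]:
    loader.require(dep)
print(len(parse_calls))  # 2
```

Keeping the registry inside the loader means callers keep declaring dependencies exactly as before; only the cost of repeat declarations changes.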

Although the fix was straightforward, it still made folks nervous. The change was going to affect virtually every hit to the system. The fix sat in a staging environment for weeks. Every one-off glitch in that environment came my way as potentially being related to the change.

The doubts were understandable but frustrating. I was confident that the change would have significant positive impacts for users. I knew the change was straightforward. With the help of a supportive colleague, I steeled myself for potential disaster and pushed the code to production.

CPU load on the web tier dropped 35%. Average backend processing time dropped 100ms.

Obviously, this was a best-case scenario. But after the first couple of days in the test environment, nothing significant happened to increase confidence in deploying the change. Energy was wasted on angst, and in the meantime users went without the drastic performance benefits.

For better or worse, the only way to find out what was going to happen was to put the code in production. With the benefit of hindsight, it would have been better to do a phased release to build confidence incrementally. At the time, I didn’t have the context to recognize that was what I needed.

Science!

Fast-forward a couple of years. I was working on a critical-path workflow in old code with no tests. The code needed a refactor of a core processing loop to support a new use case.

The conclusion I came to is that this is what happens when applying @mipsytipsy’s “test in production” mantra. Take the momentum of production systems and apply small forces in the right places to redirect it into ways of making those systems better.

— mjobrien (@mjobrien) July 27, 2019

Fortunately, a colleague had recently mentioned the scientist gem from GitHub. Even though I wasn’t working in Ruby, I had everything I needed to apply the concepts. The output from the code needing a refactor was cached, and I had logging capabilities.

I wrote a refactored version of the core processing loop. Then I added instrumentation so that, on a cache hit, a sample of those requests was also run through the rewritten core loop. The new loop’s output was compared to the cached data, and the results were logged. With this in place, I was able to iterate quickly and fix problems.
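A minimal sketch of that experiment, in the spirit of scientist but not using its API: the cached output plays the trusted "control," the rewritten loop is the "candidate," and only a random sample of cache hits pays the cost of running it. `serve_from_cache`, `new_core_loop`, and `SAMPLE_RATE` are hypothetical names, and the 5% rate is an illustrative assumption, not the value actually used.

```python
import logging
import random
from typing import Any, Callable

logger = logging.getLogger("experiment")

SAMPLE_RATE = 0.05  # hypothetical: run the candidate on roughly 5% of cache hits


def serve_from_cache(
    request: Any,
    cached_output: Any,
    new_core_loop: Callable[[Any], Any],
    sample_rate: float = SAMPLE_RATE,
    rng: Callable[[], float] = random.random,
) -> Any:
    """Always return the cached (trusted) output. On a random sample of hits,
    also run the rewritten loop and log whether its result matches."""
    if rng() < sample_rate:
        try:
            candidate = new_core_loop(request)
        except Exception:
            # A crash in the new code must never affect the real response.
            logger.exception("experiment error for request %r", request)
        else:
            if candidate == cached_output:
                logger.info("experiment match for request %r", request)
            else:
                logger.warning(
                    "experiment mismatch for request %r: cached=%r candidate=%r",
                    request, cached_output, candidate,
                )
    # The user always gets the cached value, whatever the experiment did.
    return cached_output
```

Because the function returns `cached_output` unconditionally, a buggy candidate can only produce log lines, never a wrong answer for a user; that is what makes iterating on it in production low-risk.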

Testing in production made this work virtually stress-free. The release of the refactored code was done without fear. One or two minor bugs came up after the fact, but overall this approach was a huge success.

It would have been infeasible to do this work without comparing against production traffic. If you were dead-set against testing in production directly, you might be able to pull hits from the logs and build up a test suite to run on the side. That would have taken at least as much work and produced a less varied set of test cases.

Second time’s the charm

So far I’ve gotten a little lucky without testing in prod and succeeded by planning to test in prod from the start. I’ve also gotten to talk about two career highlights while hyping testing in prod.

Unfortunately, I have also used testing in prod to bail out a failed project.

The homepage for the product I was working on was dated, so the marketing team ran a big project to redesign it. The new design looked better, worked better across devices, and was generally an improvement. The launch date was set, testing was done, and we had a big-bang launch.

Then the support calls started rolling in. People were struggling to log in. Password resets were through the roof. Before long, a rollback was called.

The redesign removed a login form that had been on the homepage for seven years and replaced it with a login button. This made for a cleaner design and a generally better user experience. However, testing missed one thing: the new login process kept browsers from autofilling the login form. Users who relied on autofill to log in were stuck, especially those who relied on it so heavily that they couldn’t recall their username.

I was not involved in the original launch, but I got pulled in to make sure things were right for the relaunch. I studied browser autofill behaviors. I wrote detailed notes for QA and support to understand the use cases. And we did lots more testing.

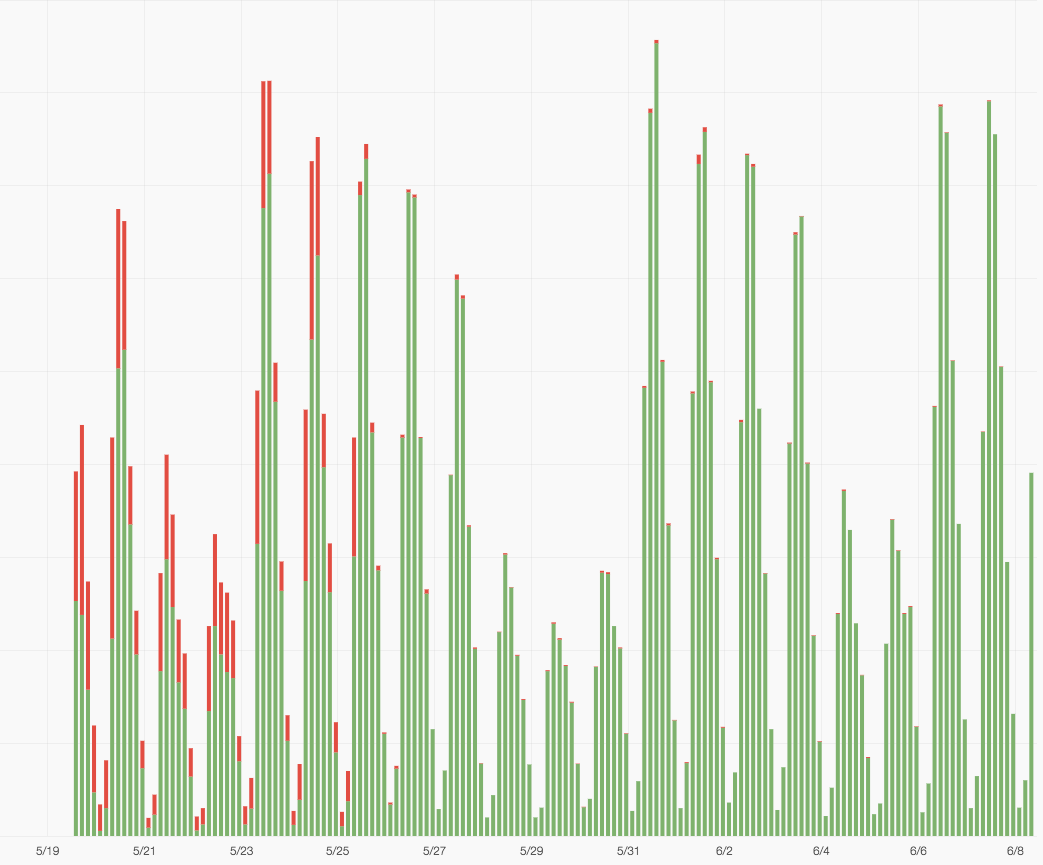

Most importantly, I set things up to guarantee success when relaunching the new homepage. I split the existing homepage so that it could serve either the login form or the login button. After we’d done our internal tests, we slowly ramped up serving a percentage of hits with the login button instead of the form. Once the old homepage was serving a login button 100% of the time, we could confidently relaunch the new site by ensuring the links pointed to the same URL.
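The ramp-up needs little more than a conditional and a random number. Here is a minimal sketch, assuming a per-hit decision; `choose_login_variant`, `LOGIN_BUTTON_PERCENT`, and the variant names are hypothetical, not the original implementation.

```python
import random
from typing import Callable

# Hypothetical knob: share of homepage hits (0-100) that get the login button.
# Ramping up means raising this value, e.g. 1 -> 10 -> 50 -> 100.
LOGIN_BUTTON_PERCENT = 10


def choose_login_variant(
    percent: float = LOGIN_BUTTON_PERCENT,
    rng: Callable[[], float] = random.random,
) -> str:
    """Pick which login UI to render for a single homepage hit."""
    # random.random() returns a float in [0.0, 1.0), so comparing it with
    # percent / 100 sends roughly `percent`% of hits to the login button.
    # At 0 every hit gets the form; at 100 every hit gets the button.
    if rng() < percent / 100:
        return "login_button"
    return "login_form"
```

One design choice to be aware of: deciding per hit means the same visitor can see the form on one page load and the button on the next. If that matters, hashing a stable identifier (such as a session or user ID) into the 0-100 range makes the assignment sticky while keeping the same ramp-up knob.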

Now you try

I carry a burden of knowledge that makes it hard for me to imagine not testing in prod. I’ve learned a lot over the past couple years about the possibilities that are unlocked by taking testing in prod to its logical end. A robust feature flagging setup can enable continuous delivery where staging environments are a thing of the past.

But it doesn’t require paid tools or generalized libraries to test in prod. The examples I’ve described were implemented with simple conditionals and random number generation. If you can deploy to production relatively easily, you can probably test in prod. (If you can’t deploy to production relatively easily, start fixing that today.)

I test in prod. How could you?